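I. Configuring Version-Based Routing

1. Add labels to the service

Istio can split traffic between versions only if the service's pods carry a version label, so each Deployment needs one.

For example: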

    selector:
      matchLabels:
        app: nginx-php
        version: v1   # the selector must also match on version
    strategy:
      rollingUpdate:
        maxSurge: 25%
        maxUnavailable: 25%
      type: RollingUpdate
    template:
      metadata:
        annotations:
          prometheus.io/path: /stats/prometheus
          prometheus.io/port: "15020"
          prometheus.io/scrape: "true"
          sidecar.istio.io/status: '{"initContainers":["istio-init"],"containers":["istio-proxy"],"volumes":["istio-envoy","istio-data","istio-podinfo","istiod-ca-cert"],"imagePullSecrets":null,"revision":"default"}'
        creationTimestamp: null
        labels:
          app: nginx-php
          security.istio.io/tlsMode: istio
          service.istio.io/canonical-name: nginx-php
          service.istio.io/canonical-revision: v1
          version: v1   # the version label on the pod

Check that the pods carry this label:

kubectl get po --show-labels

nginx-v1-74fb95984c-n4q7n 3/3 Running 0 147m app=nginx-php,pod-template-hash=74fb95984c,security.istio.io/tlsMode=istio,service.istio.io/canonical-name=nginx-php,service.istio.io/canonical-revision=v1,version=v1

nginx-v2-b6c55c89-dzq4z 3/3 Running 0 150m app=nginx-php,pod-template-hash=b6c55c89,security.istio.io/tlsMode=istio,service.istio.io/canonical-name=nginx-php,service.istio.io/canonical-revision=v2,version=v2

2. Configure the DestinationRule

Define one subset per version in the DestinationRule so that traffic can be forwarded to each version of the service.

    kind: DestinationRule
    apiVersion: networking.istio.io/v1alpha3
    metadata:
      name: nginx
      namespace: default
      labels:
        provider: asm
    spec:
      host: nginx-php
      subsets:
        - name: v2
          labels:
            version: v2
        - name: v1
          labels:
            version: v1
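To make the subset mechanism concrete, here is a minimal Python sketch of the matching idea: a pod belongs to a subset when its labels contain every label the subset declares. It only illustrates the rule and is not how Istio implements it. The names `PODS`, `SUBSETS`, and `pods_in_subset` are hypothetical; the pod names and labels are taken from the `kubectl get po --show-labels` output.

```python
# Pod labels copied from the `kubectl get po --show-labels` output
# (only the labels relevant to subset matching are kept).
PODS = {
    "nginx-v1-74fb95984c-n4q7n": {"app": "nginx-php", "version": "v1"},
    "nginx-v2-b6c55c89-dzq4z": {"app": "nginx-php", "version": "v2"},
}

# Subsets as declared in the DestinationRule: subset name -> required labels.
SUBSETS = {"v1": {"version": "v1"}, "v2": {"version": "v2"}}


def pods_in_subset(subset_name, pods=PODS, subsets=SUBSETS):
    """Return the pods whose labels contain every label of the subset."""
    wanted = subsets[subset_name]
    return sorted(
        name
        for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in wanted.items())
    )


if __name__ == "__main__":
    for subset in SUBSETS:
        print(subset, pods_in_subset(subset))
```

If a pod lacked the version label it would match neither subset, which is why the label has to be present before any version-based routing can work.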

3. Configure the VirtualService

    kind: VirtualService
    apiVersion: networking.istio.io/v1alpha3
    metadata:
      name: nginx-vs
      namespace: default
    spec:
      hosts:
        - www.huhuhahei.cn
      gateways:
        - nginx-gateway
      http:
        - name: nginx
          route:
            - destination:
                host: nginx-php
                subset: v1
              weight: 50
            - destination:
                host: nginx-php
                subset: v2
              weight: 50

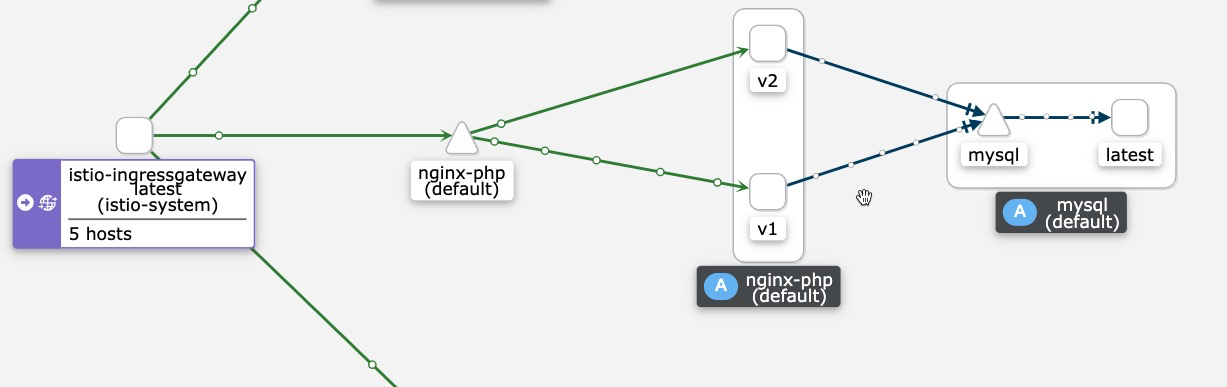

In Kiali you can see that traffic is forwarded to both backend versions.
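The effect of the two `weight: 50` entries can be pictured with a small simulation. This is only a sketch that assumes each request independently picks a subset with probability proportional to its weight; `ROUTES`, `pick_subset`, and `simulate` are hypothetical names, and the real selection happens inside the Envoy proxy.

```python
import random
from collections import Counter

# Route weights from the VirtualService: subset -> weight.
ROUTES = {"v1": 50, "v2": 50}


def pick_subset(rng, routes=ROUTES):
    """Pick one subset with probability proportional to its weight."""
    names = list(routes)
    return rng.choices(names, weights=[routes[n] for n in names], k=1)[0]


def simulate(requests, seed=0, routes=ROUTES):
    """Count how many simulated requests land on each subset."""
    rng = random.Random(seed)
    return Counter(pick_subset(rng, routes) for _ in range(requests))


if __name__ == "__main__":
    print(simulate(10_000))
```

Over many requests the counts approach the configured ratio, but a short test run can easily look uneven, so a small sample in Kiali should not be read as a misconfiguration.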

II. Simulating Fault Injection

1. Inject an HTTP delay fault

    kind: VirtualService
    apiVersion: networking.istio.io/v1alpha3
    metadata:
      name: nginx-vs
      namespace: default
    spec:
      hosts:
        - www.huhuhahei.cn
      gateways:
        - nginx-gateway
      http:
        - name: nginx
          route:
            - destination:
                host: nginx-php
                subset: v1
              weight: 50
            - destination:
                host: nginx-php
                subset: v2
              weight: 50
          fault:
            delay:
              fixedDelay: 7s   # inject a fixed 7s delay
              percentage:
                value: 100     # percentage of requests to delay

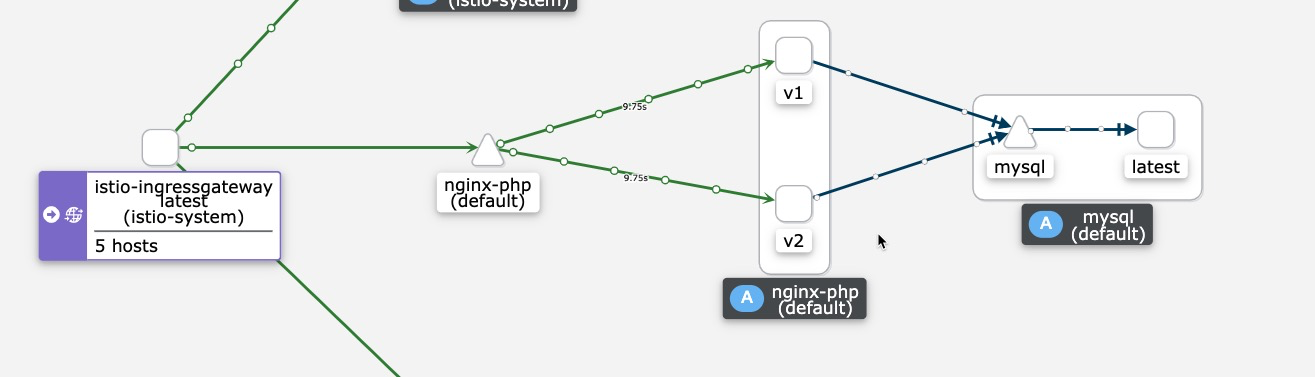

Kiali shows the average request latency rising to 9.75s.

2. Inject an HTTP abort fault

    kind: VirtualService
    apiVersion: networking.istio.io/v1alpha3
    metadata:
      name: nginx-vs
      namespace: default
    spec:
      hosts:
        - www.huhuhahei.cn
      gateways:
        - nginx-gateway
      http:
        - name: nginx
          route:
            - destination:
                host: nginx-php
                subset: v1
              weight: 50
            - destination:
                host: nginx-php
                subset: v2
              weight: 50
          fault:
            abort:
              httpStatus: 500  # return HTTP 500 to the caller
              percentage:
                value: 100     # percentage of requests to abort

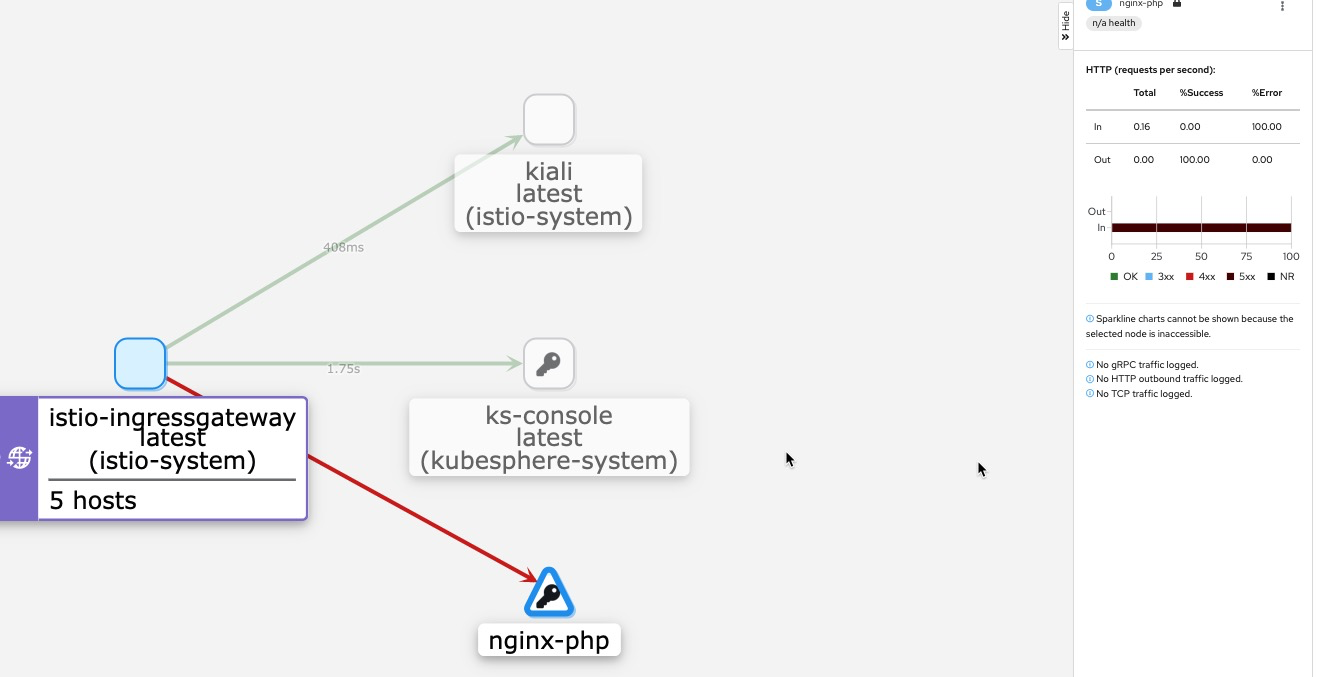

Kiali shows that every request now returns a 5xx.

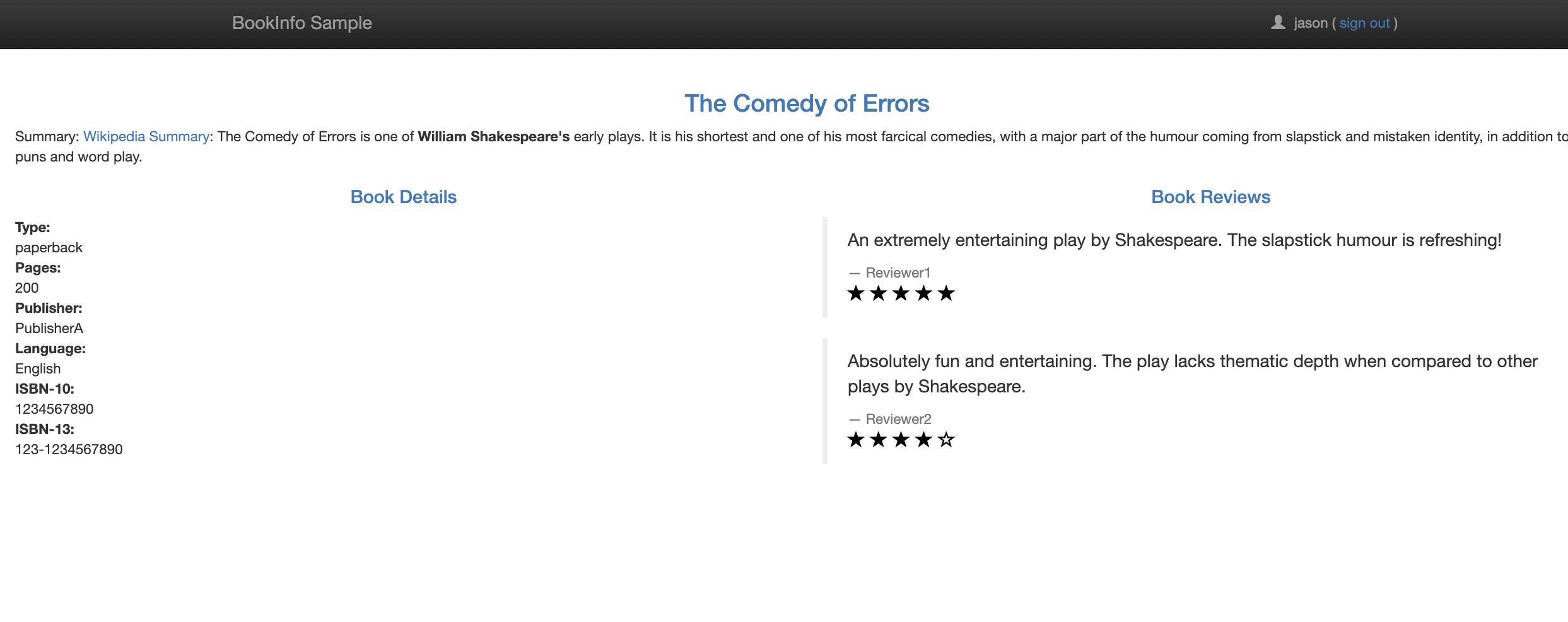
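The two fault types differ in what the caller sees: a delay holds the request before it is forwarded, while an abort answers with the configured status and never reaches the backend. The Python sketch below models that per-request decision under the assumption that each request is sampled independently against the configured percentage. `apply_fault` and its parameters are hypothetical names and not part of any Istio API.

```python
import random


def apply_fault(rng, delay_s=0.0, delay_pct=0.0, abort_status=None, abort_pct=0.0):
    """Return (added_delay_seconds, status_or_None) for one simulated request.

    status_or_None is the injected HTTP status if the request is aborted,
    or None if the request would be forwarded to the backend.
    """
    # A percentage of 100 always triggers; a percentage of 0 never does.
    added = delay_s if rng.random() * 100 < delay_pct else 0.0
    aborted = abort_status is not None and rng.random() * 100 < abort_pct
    return added, (abort_status if aborted else None)


if __name__ == "__main__":
    rng = random.Random(1)
    # Mirrors the delay example: fixedDelay 7s on 100% of requests.
    print(apply_fault(rng, delay_s=7.0, delay_pct=100))
    # Mirrors the abort example: httpStatus 500 on 100% of requests.
    print(apply_fault(rng, abort_status=500, abort_pct=100))
```

Lowering the percentage is the usual way to test how clients cope with partial failure instead of a total outage.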

III. Routing Based on User Identity

kubectl apply -f samples/bookinfo/networking/virtual-service-reviews-test-v2.yaml

The YAML file looks like this:

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: reviews
      ...
    spec:
      hosts:
        - reviews
      http:
        - match:
            - headers:
                end-user:
                  exact: jason
          route:
            - destination:
                host: reviews
                subset: v2
        - route:
            - destination:
                host: reviews
                subset: v1

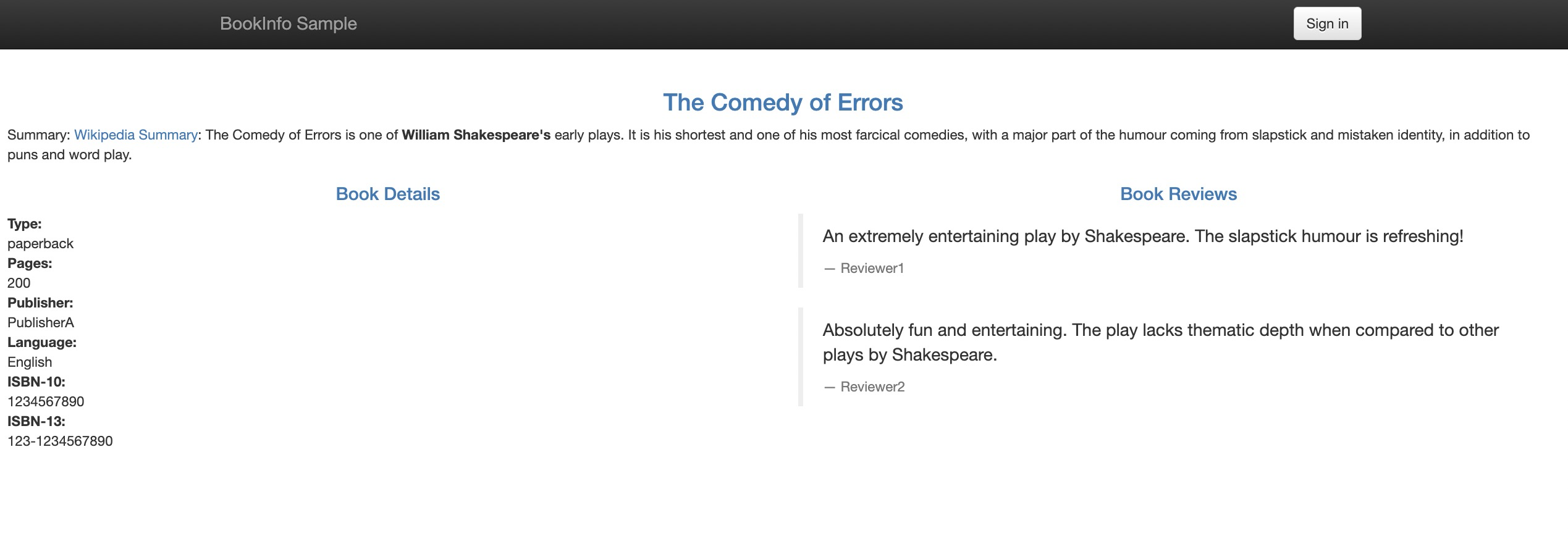

Visit Bookinfo again: requests reach only the reviews v1 service.

Log in as the user jason and visit again: requests now reach only the reviews v2 service.
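The behavior follows from rule order: the `http` rules are evaluated top to bottom, and the rule without a `match` acts as the default. A minimal Python sketch of that first-match logic, assuming only exact header matches as in this VirtualService (`RULES` and `route` are hypothetical names):

```python
# Rules in the same order as the VirtualService's `http` list.
# A rule with no "match" entry acts as the catch-all default.
RULES = [
    {"match": {"end-user": "jason"}, "subset": "v2"},
    {"subset": "v1"},
]


def route(headers, rules=RULES):
    """Return the subset of the first rule whose exact header matches all hold."""
    for rule in rules:
        match = rule.get("match", {})
        if all(headers.get(name) == value for name, value in match.items()):
            return rule["subset"]
    return None  # no rule matched


if __name__ == "__main__":
    print(route({"end-user": "jason"}))
    print(route({}))
```

Because `exact` compares the whole value, a header value such as `Jason` falls through to the default rule in this sketch.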

Cleanup

kubectl delete -f samples/bookinfo/networking/virtual-service-all-v1.yaml

IV. Configuring the Circuit Breaker

    kind: DestinationRule
    apiVersion: networking.istio.io/v1alpha3
    metadata:
      name: nginx
      namespace: default
    spec:
      host: nginx-php
      trafficPolicy:
        connectionPool:
          tcp:
            maxConnections: 1
          http:
            http1MaxPendingRequests: 1
            maxRequestsPerConnection: 1
        outlierDetection:
          consecutive5xxErrors: 1
          interval: 1s
          baseEjectionTime: 180s
          maxEjectionPercent: 100
      subsets:
        - name: v2
          labels:
            version: v2
        - name: v1
          labels:
            version: v1
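One way to reason about the `connectionPool` limits is as a two-stage admission check: a request first needs a free connection, otherwise a free slot in the pending queue, and if neither exists it is rejected with a 503. The Python sketch below encodes that deliberately simplified mental model. It is an assumption made for illustration, not Envoy's actual implementation, and `admit` with its parameters is a hypothetical name.

```python
def admit(active_connections, pending_requests, max_connections=1, max_pending=1):
    """Classify a new request under a simplified connection-pool model.

    Returns "connect" if a connection slot is free, "queue" if the request
    can wait in the pending queue, and "503" if both limits are exhausted.
    The defaults mirror maxConnections: 1 and http1MaxPendingRequests: 1.
    """
    if active_connections < max_connections:
        return "connect"
    if pending_requests < max_pending:
        return "queue"
    return "503"


if __name__ == "__main__":
    print(admit(0, 0))
    print(admit(1, 0))
    print(admit(1, 1))
```

With both limits set to 1, even modest concurrency can overflow the pool, which is why such small values are convenient for demonstrating the breaker.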

Use a load-testing tool (Fortio here) to send requests and trip the circuit breaker:

export FORTIO_POD=$(kubectl get pods -l app=fortio -o 'jsonpath={.items[0].metadata.name}')

kubectl exec "$FORTIO_POD" -c fortio -- /usr/bin/fortio load -c 2 -qps 0 -n 20 -loglevel Warning https://www.huhuhahei.cn

07:38:03 I logger.go:128> Log level is now 3 Warning (was 2 Info)

Fortio 1.32.0 running at 0 queries per second, 2->2 procs, for 20 calls: https://www.huhuhahei.cn

Starting at max qps with 2 thread(s) [gomax 2] for exactly 20 calls (10 per thread + 0)

07:38:08 W http_client.go:825> [1] Non ok http code 503 (HTTP/1.1 503)

07:38:08 W http_client.go:825> [1] Non ok http code 503 (HTTP/1.1 503)

07:38:08 W http_client.go:825> [1] Non ok http code 503 (HTTP/1.1 503)

07:38:09 W http_client.go:825> [0] Non ok http code 503 (HTTP/1.1 503)

07:38:09 W http_client.go:825> [0] Non ok http code 503 (HTTP/1.1 503)

07:38:09 W http_client.go:825> [0] Non ok http code 503 (HTTP/1.1 503)

07:38:09 W http_client.go:825> [1] Non ok http code 503 (HTTP/1.1 503)

07:38:09 W http_client.go:825> [0] Non ok http code 503 (HTTP/1.1 503)

07:38:09 W http_client.go:825> [1] Non ok http code 503 (HTTP/1.1 503)

07:38:09 W http_client.go:825> [0] Non ok http code 503 (HTTP/1.1 503)

07:38:09 W http_client.go:825> [0] Non ok http code 503 (HTTP/1.1 503)

07:38:09 W http_client.go:825> [0] Non ok http code 503 (HTTP/1.1 503)

Ended after 5.132047777s : 20 calls. qps=3.8971

Aggregated Function Time : count 20 avg 0.51177983 +/- 0.727 min 0.000999321 max 2.04054618 sum 10.2355966

# range, mid point, percentile, count

>= 0.000999321 <= 0.001 , 0.000999661 , 5.00, 1

> 0.003 <= 0.004 , 0.0035 , 10.00, 1

> 0.004 <= 0.005 , 0.0045 , 20.00, 2

> 0.005 <= 0.006 , 0.0055 , 25.00, 1

> 0.007 <= 0.008 , 0.0075 , 35.00, 2

> 0.008 <= 0.009 , 0.0085 , 40.00, 1

> 0.014 <= 0.016 , 0.015 , 45.00, 1

> 0.016 <= 0.018 , 0.017 , 50.00, 1

> 0.025 <= 0.03 , 0.0275 , 55.00, 1

> 0.1 <= 0.12 , 0.11 , 60.00, 1

> 0.14 <= 0.16 , 0.15 , 65.00, 1

> 0.8 <= 0.9 , 0.85 , 70.00, 1

> 0.9 <= 1 , 0.95 , 75.00, 1

> 1 <= 2 , 1.5 , 95.00, 4

> 2 <= 2.04055 , 2.02027 , 100.00, 1

# target 50% 0.018

# target 75% 1

# target 90% 1.75

# target 99% 2.03244

# target 99.9% 2.03974

Error cases : count 12 avg 0.020801126 +/- 0.03857 min 0.000999321 max 0.146605165 sum 0.24961351

# range, mid point, percentile, count

>= 0.000999321 <= 0.001 , 0.000999661 , 8.33, 1

> 0.003 <= 0.004 , 0.0035 , 16.67, 1

> 0.004 <= 0.005 , 0.0045 , 33.33, 2

> 0.005 <= 0.006 , 0.0055 , 41.67, 1

> 0.007 <= 0.008 , 0.0075 , 58.33, 2

> 0.008 <= 0.009 , 0.0085 , 66.67, 1

> 0.014 <= 0.016 , 0.015 , 75.00, 1

> 0.016 <= 0.018 , 0.017 , 83.33, 1

> 0.025 <= 0.03 , 0.0275 , 91.67, 1

> 0.14 <= 0.146605 , 0.143303 , 100.00, 1

# target 50% 0.0075

# target 75% 0.016

# target 90% 0.029

# target 99% 0.145813

# target 99.9% 0.146526

Sockets used: 12 (for perfect keepalive, would be 2)

Uniform: false, Jitter: false

IP addresses distribution:

47.253.221.71:443: 2

Code 200 : 8 (40.0 %)

Code 503 : 12 (60.0 %)

Response Header Sizes : count 20 avg 92.25 +/- 113 min 0 max 231 sum 1845

Response Body/Total Sizes : count 20 avg 11177.25 +/- 1.349e+04 min 159 max 27709 sum 223545

All done 20 calls (plus 0 warmup) 511.780 ms avg, 3.9 qps

The output shows that 40% of the requests returned 200 and 60% returned 503:

Code 200 : 8 (40.0 %)

Code 503 : 12 (60.0 %)
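When comparing several runs, it can help to pull these summary lines out of the Fortio output programmatically. The following Python sketch parses lines of the form `Code 503 : 12 (60.0 %)` and computes the share of non-200 responses. The regular expression is written against the output shown in this post and may need adjusting for other Fortio versions; `status_counts` and `error_rate` are hypothetical helper names.

```python
import re

# Matches Fortio summary lines such as "Code 503 : 12 (60.0 %)".
CODE_LINE = re.compile(r"^Code\s+(-?\d+)\s*:\s*(\d+)\s*\(")


def status_counts(fortio_output):
    """Return {status_code: count} parsed from Fortio's summary lines."""
    counts = {}
    for line in fortio_output.splitlines():
        m = CODE_LINE.match(line.strip())
        if m:
            counts[int(m.group(1))] = int(m.group(2))
    return counts


def error_rate(counts):
    """Fraction of requests whose status code is not 200."""
    total = sum(counts.values())
    return (total - counts.get(200, 0)) / total if total else 0.0


if __name__ == "__main__":
    sample = "Code 200 : 8 (40.0 %)\nCode 503 : 12 (60.0 %)"
    counts = status_counts(sample)
    print(counts, error_rate(counts))
```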

Remove the circuit breaker settings and rerun the test; every request now succeeds:

kubectl exec "$FORTIO_POD" -c fortio -- /usr/bin/fortio load -c 2 -qps 0 -n 20 -loglevel Warning https://www.huhuhahei.cn

07:43:52 I logger.go:128> Log level is now 3 Warning (was 2 Info)

Fortio 1.32.0 running at 0 queries per second, 2->2 procs, for 20 calls: https://www.huhuhahei.cn

Starting at max qps with 2 thread(s) [gomax 2] for exactly 20 calls (10 per thread + 0)

Ended after 13.996234101s : 20 calls. qps=1.429

Aggregated Function Time : count 20 avg 1.3542445 +/- 0.783 min 0.100419758 max 2.847801559 sum 27.0848909

# range, mid point, percentile, count

>= 0.10042 <= 0.12 , 0.11021 , 5.00, 1

> 0.12 <= 0.14 , 0.13 , 15.00, 2

> 0.9 <= 1 , 0.95 , 30.00, 3

> 1 <= 2 , 1.5 , 75.00, 9

> 2 <= 2.8478 , 2.4239 , 100.00, 5

# target 50% 1.44444

# target 75% 2

# target 90% 2.50868

# target 99% 2.81389

# target 99.9% 2.84441

Error cases : no data

Sockets used: 2 (for perfect keepalive, would be 2)

Uniform: false, Jitter: false

IP addresses distribution:

47.253.221.71:443: 2

Code 200 : 20 (100.0 %)

Response Header Sizes : count 20 avg 230.55 +/- 0.5895 min 229 max 231 sum 4611

Response Body/Total Sizes : count 20 avg 27704.95 +/- 4.018 min 27700 max 27709 sum 554099

All done 20 calls (plus 0 warmup) 1354.245 ms avg, 1.4 qps