I. Environment preparation

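Create the required namespace and prepare the directories for persistent data. The PVs below export `/data/elk` and `/data/kibana` from the NFS server `master`, so create the directories there.

```shell
kubectl create ns logs

# Create the persistent directories for Elasticsearch and Kibana
mkdir -p /data/kibana /data/elk

# Grant full permissions (the Elasticsearch and Kibana containers do not run as root)
chmod -R 777 /data/kibana /data/elk
```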

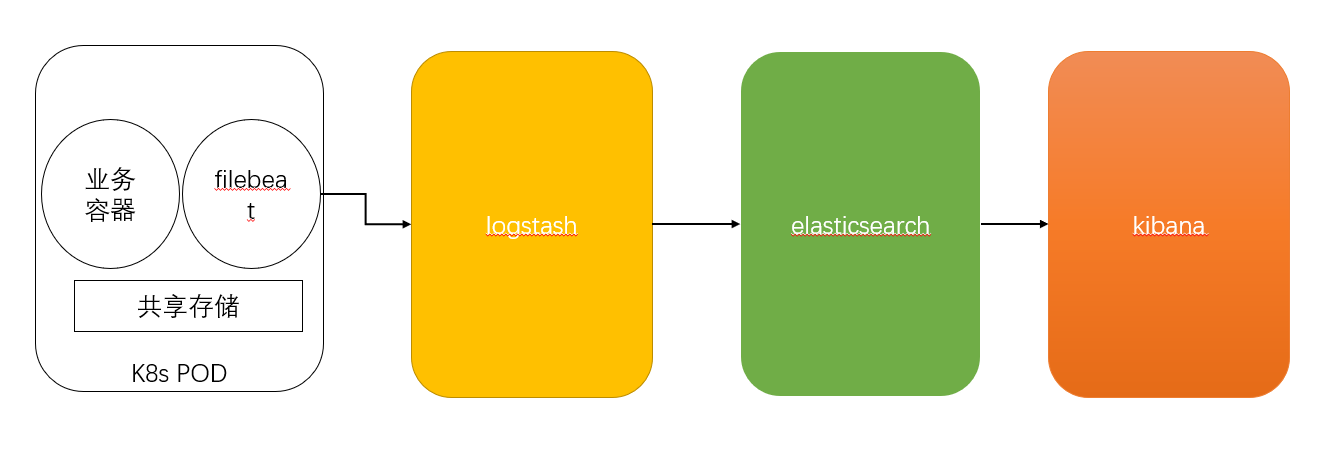

II. ELK architecture

III. Deploy Elasticsearch

3.1 PV and PVC (elastic_pvc.yaml)

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: elastic-logs
  labels:
    app: elastic-logs
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /data/elk
    server: master
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: elastic-logs-pvc
  # PVCs are namespaced: the claim must be in the same namespace as the Pod that mounts it
  namespace: logs
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: nfs
  selector:
    matchLabels:
      app: elastic-logs
```
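A PVC binds to a PV only when the storage class, access modes and capacity are compatible. Because a `selector` is set here, the PV must also carry the selected labels. The sketch below is a simplified Python model of those checks for the two objects above. It is not the real binding controller, which also considers volume mode, node affinity and PV phase. The helper names `to_bytes` and `pv_matches` are hypothetical.

```python
import re

# Binary-suffix multipliers for the Kubernetes quantities used in this post
_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '10Gi' to bytes (binary suffixes only)."""
    m = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti)?", quantity)
    if m is None:
        raise ValueError(f"unsupported quantity: {quantity}")
    number, unit = m.groups()
    return int(number) * _UNITS.get(unit, 1)


def pv_matches(pv: dict, pvc: dict) -> bool:
    """Simplified check of whether a PVC could bind to a PV."""
    pv_spec, pvc_spec = pv["spec"], pvc["spec"]
    if pv_spec.get("storageClassName") != pvc_spec.get("storageClassName"):
        return False
    # Every access mode the claim asks for must be offered by the volume
    if not set(pvc_spec["accessModes"]) <= set(pv_spec["accessModes"]):
        return False
    requested = to_bytes(pvc_spec["resources"]["requests"]["storage"])
    if to_bytes(pv_spec["capacity"]["storage"]) < requested:
        return False
    # matchLabels: every selector label must be on the PV with the same value
    wanted = pvc_spec.get("selector", {}).get("matchLabels", {})
    labels = pv["metadata"].get("labels", {})
    return all(labels.get(k) == v for k, v in wanted.items())


pv = {
    "metadata": {"name": "elastic-logs", "labels": {"app": "elastic-logs"}},
    "spec": {"capacity": {"storage": "10Gi"}, "accessModes": ["ReadWriteOnce"],
             "storageClassName": "nfs"},
}
pvc = {
    "metadata": {"name": "elastic-logs-pvc", "namespace": "logs"},
    "spec": {"accessModes": ["ReadWriteOnce"],
             "resources": {"requests": {"storage": "10Gi"}},
             "storageClassName": "nfs",
             "selector": {"matchLabels": {"app": "elastic-logs"}}},
}
print(pv_matches(pv, pvc))  # True
```

After `kubectl apply`, `kubectl get pv,pvc -n logs` should list both objects with STATUS `Bound`. A claim stuck in `Pending` usually means one of these fields does not line up.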

3.2 Deployment (elastic_deploy.yaml)

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: elasticsearch-logging
    version: v1
  name: elasticsearch
  namespace: logs
spec:
  minReadySeconds: 10
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: elasticsearch-logging
      version: v1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: elasticsearch-logging
        version: v1
    spec:
      affinity:
        nodeAffinity: {}
      containers:
        - env:
            # Run as a single-node cluster (no discovery of other nodes)
            - name: discovery.type
              value: single-node
            - name: ES_JAVA_OPTS
              value: -Xms512m -Xmx512m
            - name: MINIMUM_MASTER_NODES
              value: "1"
          image: docker.elastic.co/elasticsearch/elasticsearch:7.12.0-amd64
          imagePullPolicy: IfNotPresent
          livenessProbe:
            failureThreshold: 3
            initialDelaySeconds: 30
            periodSeconds: 10
            successThreshold: 1
            tcpSocket:
              port: 9200
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 1
            initialDelaySeconds: 15
            periodSeconds: 5
            successThreshold: 1
            tcpSocket:
              port: 9200
            timeoutSeconds: 1
          name: elasticsearch-logging
          ports:
            - containerPort: 9200
              name: db
              protocol: TCP
            - containerPort: 9300
              name: transport
              protocol: TCP
          resources:
            limits:
              cpu: "1"
              memory: 2Gi
            requests:
              cpu: "1"
              memory: 1Gi
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /usr/share/elasticsearch/data
              name: es-persistent-storage
      dnsPolicy: ClusterFirst
      initContainers:
        # Raise the host's vm.max_map_count to the 262144 that Elasticsearch expects
        - command:
            - /sbin/sysctl
            - -w
            - vm.max_map_count=262144
          image: alpine:3.6
          imagePullPolicy: IfNotPresent
          name: elasticsearch-logging-init
          resources: {}
          securityContext:
            privileged: true
            procMount: Default
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
        - name: es-persistent-storage
          persistentVolumeClaim:
            claimName: elastic-logs-pvc
```
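Two settings in this Deployment interact with the container limits and the host. Elastic recommends setting `-Xms` equal to `-Xmx` and keeping the heap at no more than half of the available memory. Elasticsearch also expects `vm.max_map_count` to be at least 262144, which is what the privileged init container sets. A small Python sketch of those checks follows. The function names are hypothetical. On a node, the current value can be read from `/proc/sys/vm/max_map_count`, which `read_max_map_count` does.

```python
from __future__ import annotations

import re

_K8S_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}
_JVM_UNITS = {"k": 2**10, "m": 2**20, "g": 2**30}


def k8s_bytes(quantity: str) -> int:
    """Convert a Kubernetes memory quantity such as '2Gi' to bytes."""
    m = re.fullmatch(r"(\d+)(Ki|Mi|Gi)?", quantity)
    if m is None:
        raise ValueError(f"unsupported quantity: {quantity}")
    return int(m.group(1)) * _K8S_UNITS.get(m.group(2), 1)


def jvm_heap(opts: str) -> tuple[int, int]:
    """Return (Xms, Xmx) in bytes parsed from an ES_JAVA_OPTS string."""
    sizes = {}
    for flag, number, unit in re.findall(r"-X(ms|mx)(\d+)([kKmMgG]?)", opts):
        sizes[flag] = int(number) * _JVM_UNITS.get(unit.lower(), 1)
    return sizes["ms"], sizes["mx"]


def read_max_map_count(path: str = "/proc/sys/vm/max_map_count") -> int:
    """Read the current vm.max_map_count on a Linux host."""
    with open(path) as f:
        return int(f.read().strip())


def check_es(java_opts: str, memory_limit: str, max_map_count: int) -> list[str]:
    """Return a list of problems; an empty list means the checks pass."""
    problems = []
    xms, xmx = jvm_heap(java_opts)
    if xms != xmx:
        problems.append("Xms and Xmx differ")
    if xmx > k8s_bytes(memory_limit) // 2:
        problems.append("heap exceeds 50% of the memory limit")
    if max_map_count < 262144:
        problems.append("vm.max_map_count below 262144")
    return problems


# Values from the Deployment above
print(check_es("-Xms512m -Xmx512m", "2Gi", 262144))  # []
```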

3.3 Service (elastic_svc.yaml)

```yaml
apiVersion: v1
kind: Service
metadata:
  namespace: logs
  name: elasticsearch
  labels:
    app: elasticsearch-logging
spec:
  type: ClusterIP
  ports:
    - port: 9200
      name: elasticsearch
  selector:
    app: elasticsearch-logging
```

3.4 Apply to Kubernetes

```shell
kubectl apply -f elastic_pvc.yaml
kubectl apply -f elastic_deploy.yaml
kubectl apply -f elastic_svc.yaml
```
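Once the three manifests are applied, you can confirm that Elasticsearch is answering. Forward the Service port locally with `kubectl port-forward -n logs svc/elasticsearch 9200:9200`, then query the `_cluster/health` API. Below is a minimal Python sketch using only the standard library. It assumes that port-forward is running on `127.0.0.1:9200`, and the sample response body is abbreviated and illustrative.

```python
import json
import urllib.request

# Assumes `kubectl port-forward -n logs svc/elasticsearch 9200:9200` is running
HEALTH_URL = "http://127.0.0.1:9200/_cluster/health"


def health_status(body: str) -> str:
    """Return 'green', 'yellow' or 'red' from a _cluster/health JSON response."""
    return json.loads(body)["status"]


def fetch_health(url: str = HEALTH_URL, timeout: float = 5.0) -> str:
    """Query the cluster health endpoint and return its status field."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return health_status(resp.read().decode("utf-8"))


# Call fetch_health() while the port-forward is active; offline, the parser
# can be exercised on an abbreviated, illustrative response body:
print(health_status('{"status": "yellow", "number_of_nodes": 1}'))
```

On this single-node cluster, `yellow` is normal as soon as an index has replica shards (the default is one replica). Elasticsearch never allocates a replica to the same node as its primary.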

IV. Configure Logstash

4.1 Logstash pipeline configuration (logstash.yaml)

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-config
  namespace: logs
data:
  logstash.conf: |-
    input {
      beats {
        port => 5016
      }
    }
    filter {
      grok {
        match => { "message" => "%{IPORHOST:client_ip} - %{USER:auth} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:http_version})?|-)\" (%{IPORHOST:domain}|%{URIHOST:domain}|-) %{NUMBER:response} %{NUMBER:bytes} %{QS:referrer} %{QS:agent} \"(%{WORD:x_forword}|-)\" (%{URIHOST:upstream_host}|-) (%{NUMBER:upstream_response}|-) (%{WORD:upstream_cache_status}|-) %{QS:upstream_content_type} (%{BASE16FLOAT:upstream_response_time}|-) > %{BASE16FLOAT:request_time}" }
        match => { "message" => "%{IPORHOST:upstream_host} - %{USER:auth} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:http_version})?|-)\" (%{IPORHOST:domain}|%{URIHOST:domain}|-) %{NUMBER:response} %{NUMBER:bytes} %{QS:referrer} %{QS:agent} \"%{IP:client_ip}\" (%{URIHOST:upstream_host}|-) (%{NUMBER:upstream_response}|-) (%{WORD:upstream_cache_status}|-) %{QS:upstream_content_type} (%{BASE16FLOAT:upstream_response_time}|-) > %{BASE16FLOAT:request_time}" }
        match => { "message" => "%{IPORHOST:client_ip} - (%{USER:auth}|-) \[%{HTTPDATE:timestamp}\] \"%{WORD:verb} %{NOTSPACE:request} HTTP/%{NUMBER:http_version}\" (%{IPORHOST:domain}|%{URIHOST:domain}|-) %{NUMBER:response} %{NUMBER:bytes} %{QS:referrer} %{QS:agent} \"(%{WORD:x_forword}|%{IPORHOST:x_forword}|-)\" (%{IPORHOST:upstream_host}|-)\:%{NUMBER:upstream_port} (%{NUMBER:upstream_response}|-) (%{WORD:upstream_cache_status}|-) \"%{NOTSPACE:upstream_content_type}; charset\=%{NOTSPACE:upstream_content_charset}\" (%{BASE16FLOAT:upstream_response_time}|-) > %{BASE16FLOAT:request_time}" }
        match => { "message" => "%{IPORHOST:client_ip} - (%{USER:auth}|-) \[%{HTTPDATE:timestamp}\] \"%{WORD:verb} %{NOTSPACE:request} HTTP/%{NUMBER:http_version}\" (%{IPORHOST:domain}|%{URIHOST:domain}|-) %{NUMBER:response} %{NUMBER:bytes} %{QS:referrer} %{QS:agent} \"(%{WORD:x_forword}|%{IPORHOST:x_forword}|-)\" (%{IPORHOST:upstream_host}|-)\:%{NUMBER:upstream_port} (%{NUMBER:upstream_response}|-) (%{WORD:upstream_cache_status}|-) \"%{NOTSPACE:upstream_content_type};charset\=%{NOTSPACE:upstream_content_charset}\" (%{BASE16FLOAT:upstream_response_time}|-) > %{BASE16FLOAT:request_time}" }
        match => { "message" => "%{IPORHOST:client_ip} - %{USER:auth} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:http_version})?|-)\" %{NUMBER:response} (?:%{NUMBER:bytes}|-) %{QS:referrer} %{QS:agent}" }
        match => { "message" => "%{IPORHOST:client_ip} - %{USER:auth} \[%{HTTPDATE:timestamp}\] \"(%{NOTSPACE:request}(?: HTTP/%{NUMBER:http_version})?|-)\" %{NUMBER:response} (?:%{NUMBER:bytes}|-) %{QS:referrer} %{QS:agent}" }
        match => { "message" => "%{IPORHOST:client_ip} - %{USER:auth} \[%{HTTPDATE:timestamp}\] \"((%{NOTSPACE:request}(?: HTTP/%{NUMBER:http_version})?|-)|)\" %{NUMBER:response} (?:%{NUMBER:bytes}|-) %{QS:referrer} %{QS:agent}" }
        match => { "message" => "%{IPORHOST:client_ip} - %{USER:auth} \[%{HTTPDATE:timestamp}\] %{QS:request} %{NUMBER:response} (?:%{NUMBER:bytes}|-) %{QS:referrer} %{QS:agent}" }
      }
      geoip {
        source => "client_ip"
      }
      geoip {
        # Note: the grok patterns above capture x_forword, not x_forword_ip;
        # adjust source if this lookup should run
        source => "x_forword_ip"
        target => "x_forword-geo"
      }
      date {
        match => [ "timestamp" , "dd/MMM/YYYY:HH:mm:ss Z" ]
      }
      useragent {
        source => "agent"
        target => "ua"
      }
      mutate {
        split => { "x_forword" => ", "}
      }
    }
    output {
      elasticsearch {
        # ClusterIP of the elasticsearch Service (see kubectl get svc -n logs);
        # the DNS name elasticsearch.logs.svc.cluster.local:9200 also works
        # and does not change if the Service is re-created
        hosts => ["10.98.214.187:9200"]
        manage_template => false
        index => "public-ngx-alias"
      }
    }
```
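Python's `re` is only a rough stand-in for grok. The sketch below approximates the plain combined-log-format pattern above (the one ending in `%{QS:referrer} %{QS:agent}` with no upstream fields). It shows what the grok, date and mutate/split filters do to a single event. The sample line is hypothetical, and the real grok sub-patterns (`IPORHOST`, `HTTPDATE`, `QS`, ...) are stricter than these regexes.

```python
import re
from datetime import datetime, timezone
from typing import Optional

# Rough equivalent of the combined-log-format grok pattern; like %{QS},
# referrer and agent keep their surrounding double quotes.
COMBINED = re.compile(
    r'(?P<client_ip>\S+) - (?P<auth>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?:(?P<verb>\w+) (?P<request>\S+)(?: HTTP/(?P<http_version>[\d.]+))?|-)" '
    r'(?P<response>\d+) (?:(?P<bytes>\d+)|-) '
    r'(?P<referrer>"[^"]*") (?P<agent>"[^"]*")'
)


def parse_line(line: str) -> Optional[dict]:
    """Parse one nginx access-log line roughly the way grok + date would."""
    m = COMBINED.match(line)
    if m is None:
        return None  # grok would tag such an event with _grokparsefailure
    event = m.groupdict()
    # date filter "dd/MMM/YYYY:HH:mm:ss Z" -> timezone-aware timestamp
    event["@timestamp"] = datetime.strptime(event["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    return event


def split_forwarded(value: str) -> list:
    """mutate { split => { "x_forword" => ", " } } turns the header into a list."""
    return value.split(", ")


# Hypothetical sample line in nginx's default "combined" log format
sample = ('192.168.1.10 - - [10/Apr/2021:13:55:36 +0800] '
          '"GET /index.html HTTP/1.1" 200 612 "-" "curl/7.68.0"')
event = parse_line(sample)
print(event["client_ip"], event["response"], event["@timestamp"].astimezone(timezone.utc))
```

Grok tries the `match` patterns in order and stops at the first one that succeeds. This is why the more specific patterns with upstream fields come before the plain combined format.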

4.2 Deployment (logstash_deploy.yaml)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash
  namespace: logs
  labels:
    name: logstash
spec:
  replicas: 1
  selector:
    matchLabels:
      name: logstash
  template:
    metadata:
      labels:
        app: logstash
        name: logstash
    spec:
      containers:
        - name: logstash
          image: docker.elastic.co/logstash/logstash:7.12.0
          ports:
            # Beats input; must match port => 5016 in logstash.conf
            - containerPort: 5016
              protocol: TCP
            # Logstash monitoring API
            - containerPort: 9600
              protocol: TCP
          volumeMounts:
            - name: logstash-config
              # Replace only logstash.conf in the default pipeline directory
              mountPath: /usr/share/logstash/pipeline/logstash.conf
              subPath: logstash.conf
      volumes:
        - name: logstash-config
          configMap:
            name: logstash-config
```

4.3 Service (logstash_svc.yaml)

```yaml
apiVersion: v1
kind: Service
metadata:
  namespace: logs
  name: logstash
  labels:
    app: logstash
spec:
  type: ClusterIP
  ports:
    - port: 5016
      name: logstash
  selector:
    app: logstash
```

4.4 Apply to Kubernetes

```shell
kubectl apply -f logstash.yaml
kubectl apply -f logstash_deploy.yaml
kubectl apply -f logstash_svc.yaml
```
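Filebeat ships to the Logstash Service on port 5016, so it helps to confirm that port is reachable before moving on. Service DNS names such as `logstash.logs.svc.cluster.local` only resolve inside the cluster. This sketch therefore assumes it is run from a Pod that has Python. Like the `tcpSocket` probes used in this post, it only proves that a TCP connection can be opened, not that the beats protocol works.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure or timeout
        return False


# Example (run inside the cluster):
# port_open("logstash.logs.svc.cluster.local", 5016)
```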

V. Configure Kibana

5.1 PV and PVC (kibana_pvc.yaml)

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: kibana-logs
  labels:
    app: kibana-logs
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /data/kibana
    server: master
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: kibana-logs-pvc
  # PVCs are namespaced: the claim must be in the same namespace as the Pod that mounts it
  namespace: logs
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: nfs
  selector:
    matchLabels:
      app: kibana-logs
```

5.2 Deployment (kibana_deploy.yaml)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: logs
  labels:
    name: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      name: kibana
  template:
    metadata:
      labels:
        app: kibana
        name: kibana
    spec:
      containers:
        - name: kibana
          image: docker.elastic.co/kibana/kibana:7.12.0
          ports:
            - containerPort: 5601
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            initialDelaySeconds: 30
            periodSeconds: 10
            successThreshold: 1
            tcpSocket:
              port: 5601
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 1
            initialDelaySeconds: 15
            periodSeconds: 5
            successThreshold: 1
            tcpSocket:
              port: 5601
            timeoutSeconds: 1
          env:
            # Kibana 7.x reads elasticsearch.hosts (ELASTICSEARCH_HOSTS in the Docker image);
            # ELASTICSEARCH_URL was the Kibana 6.x name
            - name: ELASTICSEARCH_HOSTS
              value: http://elasticsearch.logs.svc.cluster.local:9200
          volumeMounts:
            - mountPath: /usr/share/kibana/data
              name: kibana-persistent-storage
      volumes:
        - name: kibana-persistent-storage
          persistentVolumeClaim:
            claimName: kibana-logs-pvc
```
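The probe settings decide how long Kibana has to open port 5601 before the kubelet restarts it. The liveness probe first runs after `initialDelaySeconds`, then every `periodSeconds`. The container is restarted after `failureThreshold` consecutive failures. With 30 / 10 / 3, a container that never opens the port is restarted roughly 50 seconds after it starts. Below is a tiny Python helper for that arithmetic. The function name is hypothetical, and it ignores probe timeouts and kubelet scheduling jitter.

```python
def seconds_until_restart(initial_delay: int, period: int, failure_threshold: int) -> int:
    """Approximate seconds after container start at which a liveness probe that
    never succeeds reaches `failure_threshold` consecutive failures."""
    # Failures are observed at initial_delay, initial_delay + period, ...
    return initial_delay + (failure_threshold - 1) * period


print(seconds_until_restart(30, 10, 3))  # 50
```

If Kibana regularly needs longer than that to start on your hardware, raise `initialDelaySeconds` or `failureThreshold` to avoid a restart loop.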

5.3 Service (kibana_svc.yaml)

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: logs
spec:
  type: NodePort
  ports:
    - protocol: TCP
      port: 5601
      targetPort: 5601
  selector:
    app: kibana
```
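A Service only sends traffic to Pods whose labels contain every key/value pair of its `selector`. The Deployments here carry extra labels (`name`, `version`), which is fine. Below is a minimal Python sketch of that rule, checked against the labels and selectors used so far. The dictionaries are copied by hand from the manifests, and the helper name is hypothetical.

```python
def selector_matches(selector: dict, pod_labels: dict) -> bool:
    """True if every key/value in the Service selector is present on the Pod."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


# Pod template labels from the Deployments in this post
pod_labels = {
    "elasticsearch": {"app": "elasticsearch-logging", "version": "v1"},
    "logstash": {"app": "logstash", "name": "logstash"},
    "kibana": {"app": "kibana", "name": "kibana"},
}
# spec.selector of the Services with the same names
service_selectors = {
    "elasticsearch": {"app": "elasticsearch-logging"},
    "logstash": {"app": "logstash"},
    "kibana": {"app": "kibana"},
}

for name, selector in service_selectors.items():
    print(name, selector_matches(selector, pod_labels[name]))
```

`kubectl get endpoints -n logs` shows the same thing from the cluster's side. A Service that matches no ready Pod shows `<none>` in the ENDPOINTS column.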

5.4 Apply to Kubernetes

```shell
kubectl apply -f kibana_pvc.yaml
kubectl apply -f kibana_deploy.yaml
kubectl apply -f kibana_svc.yaml
```

VI. Configure Filebeat

6.1 Deployment (filebeat_deploy.yaml)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: filebeat
  name: filebeat
  namespace: logs
spec:
  selector:
    matchLabels:
      app: filebeat
  replicas: 1
  template:
    metadata:
      labels:
        app: filebeat
    spec:
      containers:
        - image: harbor.huhuhahei.cn/huhuhahei/filebeat:6.5.1
          name: filebeat
          volumeMounts:
            - name: filebeat-config
              mountPath: /etc/filebeat.yml
              subPath: filebeat.yml
            - name: k8s-nginx-logs
              mountPath: /usr/share/filebeat/logs
          # Start filebeat with the mounted config file; -e sends its own logs to stderr
          args:
            - "-c"
            - "/etc/filebeat.yml"
            - "-e"
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
            limits:
              cpu: 500m
              memory: 500Mi
          # Run the container as UID 0 (root). With the image's default user (filebeat),
          # reading the log files fails with a permission error.
          # Side effect: kubectl exec into the container also logs in as root.
          securityContext:
            runAsUser: 0
      volumes:
        - name: filebeat-config
          configMap:
            name: filebeat-config
        # nginx log directory on the node. hostPath is node-local, so this Pod
        # must run on the node that actually holds the directory.
        - name: k8s-nginx-logs
          hostPath:
            path: /data/logs/blog-blog-logs-pvc-51d8fa72-8d0b-4bf9-9271-2fc7ddc6636d
```

6.2 Configuration file (filebeat.yml)

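```yaml
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      # Path inside the container: the nginx log hostPath is mounted at /usr/share/filebeat/logs
      # (adjust the glob to your log file names)
      - /usr/share/filebeat/logs/*.log
    fields:
      app: k8s
      type: module
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false
setup.template.settings:
  index.number_of_shards: 3
setup.kibana:
output.logstash:
  # ClusterIP of the logstash Service (beats input on port 5016);
  # logstash.logs.svc.cluster.local:5016 also works
  hosts: ["10.97.125.215:5016"]
processors:
  - add_host_metadata: ~
  - add_cloud_metadata: ~
```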
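Filebeat resolves `paths` inside its own container, where the nginx log `hostPath` is mounted at `/usr/share/filebeat/logs`. It expands globs much like Go's `filepath.Glob`, so `*` does not cross a `/`, and rotated names such as `access.log.1` do not match `*.log`. The Python sketch below is a rough check of which files a glob such as `/usr/share/filebeat/logs/*.log` would pick up. `PurePosixPath.match` applies the same per-segment rule for an absolute pattern, and the file names are hypothetical.

```python
from pathlib import PurePosixPath

# Glob as seen from inside the Filebeat container (an assumption; adjust to your files)
LOG_GLOB = "/usr/share/filebeat/logs/*.log"


def harvested(path: str, pattern: str = LOG_GLOB) -> bool:
    """True if `path` matches the glob; `*` matches within one path segment only."""
    return PurePosixPath(path).match(pattern)


for candidate in [
    "/usr/share/filebeat/logs/access.log",    # matches
    "/usr/share/filebeat/logs/access.log.1",  # rotated file: no match
    "/data/logs/access.log",                  # node path, not visible in the container
]:
    print(candidate, harvested(candidate))
```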

6.3 Apply to Kubernetes

```shell
kubectl create cm filebeat-config --from-file=filebeat.yml -n logs
kubectl apply -f filebeat_deploy.yaml
```

VII. Access Kibana and configure the index

7.1 Check the Services to find Kibana's port

```shell
kubectl get svc -n logs
NAME            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
elasticsearch   ClusterIP   10.98.214.187   <none>        9200/TCP         22h
kibana          NodePort    10.97.10.70     <none>        5601:30774/TCP   22h
```

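Open `http://<node-ip>:30774` in a browser. To create the index pattern and template, refer to the earlier ELK article "Linux部署ELK(二)" (Deploying ELK on Linux, Part 2).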
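You can also query the port instead of reading it off the table: `kubectl get svc kibana -n logs -o jsonpath='{.spec.ports[0].nodePort}'` prints just the NodePort. If you script around `kubectl get svc -n logs -o json` instead, the small Python sketch below pulls out the port and builds the Kibana URL. The helper names are hypothetical, and the node IP is a placeholder you supply.

```python
import json


def node_ports(svc_list_json: str, name: str) -> list:
    """nodePort values of Service `name` in `kubectl get svc -n logs -o json` output."""
    for item in json.loads(svc_list_json)["items"]:
        if item["metadata"]["name"] == name:
            return [p["nodePort"] for p in item["spec"]["ports"] if "nodePort" in p]
    return []


def kibana_url(node_ip: str, svc_list_json: str) -> str:
    """Build the browser URL for the kibana NodePort Service."""
    ports = node_ports(svc_list_json, "kibana")
    if not ports:
        raise LookupError("kibana Service exposes no nodePort")
    return f"http://{node_ip}:{ports[0]}"


# Abbreviated output matching the table above (only the fields used here)
sample = json.dumps({"items": [
    {"metadata": {"name": "elasticsearch"}, "spec": {"ports": [{"port": 9200}]}},
    {"metadata": {"name": "kibana"}, "spec": {"ports": [{"port": 5601, "nodePort": 30774}]}},
]})
print(kibana_url("<node-ip>", sample))
```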

Configure an nginx reverse proxy

Configuration file (ConfigMap)

```yaml
apiVersion: v1
data:
  nginx.conf: |
    server {
      listen 80;
      server_name localhost;
      location / {
        proxy_pass http://kibana.logs.svc.cluster.local:5601;
        auth_basic "secret";
        auth_basic_user_file /usr/local/nginx/conf/.test.db;
      }
    }
kind: ConfigMap
metadata:
  name: ngin-conf
  namespace: logs
```

Deployment file

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-proxy
  namespace: logs
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: harbor.huhuhahei.cn/huhuhahei/lnmp_nginx:v2
          ports:
            - name: http
              containerPort: 80
          volumeMounts:
            # Mounting the ConfigMap over conf.d hides whatever the image had in that directory
            - name: ngin-conf
              mountPath: /usr/local/nginx/conf/conf.d/
      volumes:
        - name: ngin-conf
          configMap:
            name: ngin-conf
            items:
              # The ConfigMap key nginx.conf is written to conf.d/add.conf
              - key: nginx.conf
                path: add.conf
```

Service file

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-proxy
  namespace: logs
spec:
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
```

Ingress file

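```yaml
# networking.k8s.io/v1 requires Kubernetes 1.19+; the older extensions/v1beta1
# Ingress API was removed in Kubernetes 1.22 and only works on older clusters
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-proxy
  namespace: logs
spec:
  rules:
    - host: kibana.huhuhahei.cn
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-proxy
                port:
                  number: 80
```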

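After everything is deployed, exec into the nginx container and create the password file referenced by `auth_basic_user_file` (`/usr/local/nginx/conf/.test.db`). For the detailed steps, see the article "使用nginx做反向代理为kibana登陆添加密码" (Using nginx as a reverse proxy to add a login password to Kibana).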
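The password file named by `auth_basic_user_file` holds one `user:hash` line per account. nginx accepts the crypt()/apr1 hashes produced by `htpasswd` or `openssl passwd -apr1`. It also accepts the RFC 2307 `{SSHA}` form (salted SHA-1), which can be generated without extra tools. The Python sketch below does that, with the user name and password as placeholders. Salted SHA-1 is a weak hash. Also note that a file created by exec-ing into the container disappears when the Pod is recreated, so consider mounting it from a Secret.

```python
import base64
import hashlib
import hmac
import os
from typing import Optional


def ssha_entry(user: str, password: str, salt: Optional[bytes] = None) -> str:
    """Return an nginx auth_basic_user_file line using the {SSHA} scheme:
    base64(SHA1(password + salt) + salt)."""
    salt = os.urandom(8) if salt is None else salt
    digest = hashlib.sha1(password.encode("utf-8") + salt).digest()
    return f"{user}:{{SSHA}}" + base64.b64encode(digest + salt).decode("ascii")


def ssha_verify(entry: str, password: str) -> bool:
    """Check a password against a line produced by ssha_entry()."""
    _, hashed = entry.split(":", 1)
    raw = base64.b64decode(hashed[len("{SSHA}"):])
    digest, salt = raw[:20], raw[20:]  # SHA-1 digests are 20 bytes
    candidate = hashlib.sha1(password.encode("utf-8") + salt).digest()
    return hmac.compare_digest(candidate, digest)


# Placeholder credentials; append the printed line to /usr/local/nginx/conf/.test.db
line = ssha_entry("admin", "change-me")
print(line)
```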